Predicting CJK Character Shapes

Table of Contents

| Github | http://github.com/google/autocjk |

|---|

AutoCJK is a tool for generating low-resolution predictions of uncommon CJK characters, given full-width images of their components.

Example:

Left-to-right: (a) source left-hand component, (b) source right-hand component, (c) expected composition, (d) predicted composition, and (e) (c) / (d) difference.

NB: If you already know about the Chinese language, feel free to skip the Background section and read onwards, starting from AutoCJK below. Also, if you already know about the Chinese language and notice something below is wrong, please open an issue against this repository and let me know!

Background⌗

Chinese Language⌗

The written Chinese language comprises tens of thousands of logograms (汉字; hànzì), each with a (non-unique) pronunciation and meaning. Hanzi are used in many other East Asian scripts. CJK scripts are usually fixed-width, and character proportions and spacings exist on a regular grid.

It is often useful to think of Hanzi as “decomposing” into radicals (部首; bùshǒu), which can be combined to create all other characters. The most common system of radicals is the set of 214 Kangxi radicals (康熙部首; kāngxī bùshǒu). 1

Radical composition⌗

Radicals compose in about a dozen regular ways to form new characters. A new character produced by one of these compositions is often phonetically or semantically linked to one of its components, but this is not always the case.

Two radicals can be composed horizontally (女 + 子 = 好) or vertically (几 + 木 = 朵). 2

Three radicals can be composed horizontally (氵+ 方 + 字 = 游) or vertically (日 + 罒 + 又 = 曼). In the horizontal case, it is common for two adjacent radicals to first combine into another character (方 + 字 = 斿; 氵+ 斿 = 游).

One radical can fully surround another (囗 + 玉 = 国).

One radical can surround another from above, (门 + 日 = 间), from the left (匚 + 矢 = 医), or from below (凵 + 乂 = 凶) (but never from the right).

One radical can surround another from the upper-left (广 + 木 + 床), from the upper-right (丁 + 口 = 可), or from the lower-left (辶 + 文 = 这) (but never from the lower-right).

Finally, two radicals can be overlaid. Overlaid compositions are generally ambiguous: for example: 一 + 木 = 未, but also 一 + 木 = 末. Note the widths of the horizontal strokes in each resultant character.

As can be seen from the 未 vs. 末 distinction above, there is sometimes more than one way to compose the same pair of components. Clearly, composition should be seen as a way to understand complex characters, rather than the way to parse and understand these characters.

Finally, composition is often recursive. Examine the sequence: (乛 + 头 = 买, 十 + 买 = 卖, 讠+ 卖 = 读). Composition depth rarely exceeds four or five levels.

How many Chinese characters are there?⌗

A newspaper often contains between 2,000 and 3,000 unique characters. An educated Chinese person knows around 8,000 characters. A standard Chinese dictionary contains around 20,000 characters. A comprehensive Chinese dictionary contains around 80,000 characters.

Chinese and Unicode⌗

Unicode is an international standard for encoding text. Here, ‘encoding’ means associating each character with a unique number. Unicode is divided into disjoint ranges called “blocks”, which usually group characters by script or by language. New blocks are released over time as the Unicode standard evolves.

In 1992, the Unicode consortium released an initial CJK3 block, titled CJK Unified Ideographs. This block contains around 20,000 code points representing common CJK characters. Unicode continues to release “extension” blocks containing less-common characters; these blocks are named CJK Unified Ideographs Extension A, CJK Unified Ideographs Extension B, etc. and act as supplements to the original 20,000 characters. Later blocks tend to contain CJK characters of increasing rarity.

As of 2020, there are around 93,0004 CJK unified ideographs in Unicode. Around two-thirds of all the code points in Unicode are reserved for CJK characters.5

Kangxi radicals and Unicode⌗

Even though Unicode aims to include most CJK characters, there will always be rare characters such as place names and proper nouns which do not appear in Unicode.

Unicode offers a system called IDS (Ideographic Description Characters) which helps express (but not render) rare CJK characters.

IDS is a set of ideographic description characters. An ideographic description character might represent the act of joining characters horizontally, vertically, etc. These characters can be rendered as dotted-line divisions of a unit square (ex: ⿰, ⿱, ⿲, ⿳, ⿴, …)

A series of glyphs combined by ideographic description characters is known as an ideographic description sequence.

Thus a character like 好 can also be expressed as ⿰女子. This is a useful tool for communicating rare characters, but does not help in actually rendering them.

Why doesn’t the Unicode standard adopt a compositional model for encoding Han ideographs? Wouldn’t that save a large number of code points?⌗

In short, Unicode decided that the burden on font authors and the difficulty of “normalization”, i.e. transforming characters into a normalized form to allow for searching and comparison, was too great. For a more detailed answer, see the original question and answer from the Unicode CJK FAQs.

Ideographic description sequence shaping⌗

Since Unicode prefers individual code points over composition, fonts and the platforms which enable them (Opentype, Truetype, etc.) generally do not implement “ideographic description sequence shaping”, i.e. the process of rendering “⿰女子” as “好” at display time. Instead we rely on Unicode to provide code points for the most common characters, and on font authors to provide renderings for those characters.

Complications of CJK Font Rendering⌗

Since modern text encoding for CJK characters is non-compositional, font authors need to create a rendering for each individual character in question. As noted above, there are (currently) around 93,000 CJK unified ideographs. Due to the number of characters and the difficulty of researching and creating character renderings, most fonts lag behind the latest Unicode releases by several years. 6

Why? CJK font design often tries to faithfully replicate the way natural handwriting works: adjacent strokes are joined, complex radicals take up more space than simple ones, and very complex radicals get simplified. These complications occur naturally in handwriting, but are often difficult to represent algorithmically.

Here are some examples of complications:

Complication 1: Variable-proportion radicals⌗

A character made up of components rarely gives the same amount of space to each component. Often these changes in proportion occur in response to the relative complexity and rarity of the components.

Examples:

When the left component of a character is less dense than the right component, the right component will take up around ⅔ of the horizontal space. (讠+ 吾 = 语).

When the left and right components of a character are of equal density, each component will take up around ½ of the horizontal space (身 + 朵 = 躲).

When the left and right components of a character are the same, each component will take up around ½ of the horizontal space (月 + 月 = 朋).

When the top and bottom components of a character are the same shape, they will often “nest”. (Example: 夕 + 夕 = 多) This helps squash the overall character to better fill out a square.

These are just a few of dozens of rough guidelines, each with exceptions. Radicals can be squashed, stretched, scaled, and translated (although never rotated) during composition.

The exact proportions and placement of radicals is not regular. I personally don’t believe there is an algorithm for deriving them. But, as you will see in the next section, we can approximate one.

Complication 2: Variable-form radicals⌗

Often radicals will change form as well as proportion when composed.

- When 刀 appears on the right, it can be written as刂 (as in 刖). (Exception: 切.)

- When 人 appears on the left, it can be written as 亻(as in 他). (Exception: 从.)

- When 手 appears on the left, it can be written as 扌(as in 扡). (Exception: 拜, or 帮.)

- When 心 appears on the left, it can be written as 忄(as in 快).

- When 水 appears on the left, it can be written as 氵(as in 池).

- When 火 appears on the bottom, it can be written as 灬 (as in 黑).

- When 犬 appears on the left, it can be written as 犭(as in 猪).

These are just a few examples from a longer list. Like written English’s “i before e except after c”, these rules tend to have as many exceptions as they do examples.

Because some radicals change form when they change form (i.e. 火 => 灬, as seen above), the IDS rules are flexible. You could write ⿱占火 to mean 点, but you could also choose to specify ⿱占灬 = 点.

The Long Tail⌗

There is a long tail of characters in the CJK Unified Ideographic Extension blocks which (a) have a Unicode representation, but (b) don’t yet have a rendering in most fonts. A font which fails to implement CJK Unified Ideographic Extension blocks B through G is missing roughly 65,000 characters. 7 This gulf mirrors the difference in characters between a standard Chinese dictionary and a “complete” Chinese dictionary. These characters are disproportionately likely to be proper nouns, place names, and other rare words.

AutoCJK⌗

I wrote and published AutoCJK a tool which can predict what composite CJK characters should look like. These predictions take the form of low-resolution raster images, which, while unsuitable for use in fonts, can be a useful input for a font design tool (more to come on that later).

Generative adversarial networks (GANs)⌗

AutoCJK uses a GAN which is based on Pix2Pix. Pix2Pix is a generative adversarial network (GAN). In a GAN, two neural networks train to fool each other: one network trains to produce accurate, realistic examples like those in the dataset in order to fool the discriminator, which trains to more accurately differentiate between real elements and the generated examples. Once a GAN is trained, the generator can be used to produce novel outputs similar to, but not the same as, those in the input dataset. GANs are often used to create images.

Thinking about faces actually led me to the approach of using a GAN. Faces and CJK characters are similar in a few ways: both are roughly visually balanced, both are easily recognizable, and both have both macro- and micro-scale features which contribute heavily to their apparent realism. When it comes to computationally generating new faces, even a tiny mistake can betray an image’s inauthenticity.

In short, faces and CJK characters both seem to follow subtle rules which don’t cleanly fit into an imperative algorithm but which nonetheless can be generally predicted.

Generating custom training data⌗

I fed the GAN a custom dataset8 of images of CJK characters and their decompositions.

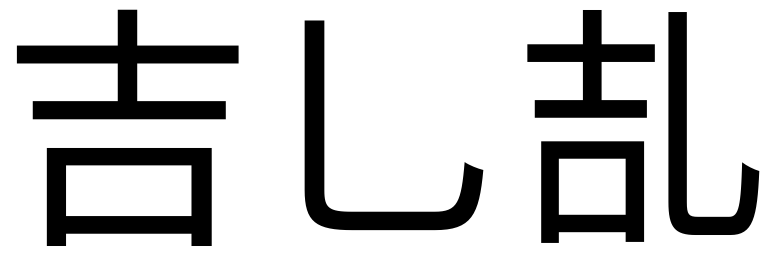

Take U+3416 㐖 as an example.

This character can be decomposed as ⿰吉乚; it is a vertical composition of U+5409 吉 and U+4E5A 乚.

When I trained the GAN on a particular font, I rendered all three characters in that font and produced an image with 吉, 乚, and 㐖 side-by-side:

I refitted the Pix2Pix tutorial to accept a 256x256x2 input image and predict a 256x256x1 output image.

The input image was two 256x256 greyscale bitmaps of the full-width input characters. In the example above, picture the superposition of the first two thirds of the image, i.e. the segments containing

吉and乚.By “superposition” I just mean that there were two layers to the image. (These are layers you could use to hold red / blue / green in a color image, for example.)

The output image was one 256x256 greyscale bitmap of the full-width output character. In the example above, picture the right third of the image, containing

㐖.

The vast majority of the Pix2Pix model was unchanged in AutoCJK’s model.

Training and using a model⌗

The v1 model currently published as a part of https://github.com/google/autocjk was trained for around 48 TPU-hours.

This model is definitely not perfect. The rasters it generates have rough edges, and the proportions and kerning between the components are biased towards the styles of the fonts I trained it on (in this case, zh-CN). Over time I will revisit this model and experiment with loss functions, higher and lower resolution predictions, etc.

The model was trained on a large number of Noto CJK fonts, but is by no means limited to predicting outputs given inputs from that particular font. I found cachebusting (testing with inputs not in the training data) very useful while building confidence in this model.

In some cases this meant rendering character pairs not in the training data. In other cases this meant using fonts not in the training data. The thumbnail image is actually trained from glyphs downloaded from GlyphWiki.

For instructions on installing and running AutoCJK, see the README.md and the installation wiki.

Acknowledgements⌗

I would be remiss if I didn’t mention Andrew West (魏安)’s dataset which offers per-character decomposition for all 92,856 CJK unified ideographs defined in Unicode version 13.0. This extraordinary resource powers the character de/composition algorithm which underlies the training image generation utility.

Kangxi radicals were developed as an indexing system in the 1600s, but Chinese indexing systems are at least two millennia old. ↩︎

]: Sometimes (but not always) the left half of a character has some semantic hint at the meaning, and the right half of a character has some phonetic hint at the pronunciation. ↩︎

In Unicode, characters which are used across many East Asian languages are broadly termed CJK, which stands for Chinese-Japanese-Korean. ↩︎

See https://en.wikipedia.org/wiki/CJK_Unified_Ideographs. ↩︎

See https://github.com/googlefonts/noto-cjk/issues/13 and https://github.com/adobe-fonts/source-han-sans/issues/222. ↩︎

The characters in these extension blocks are unevenly distributed across traditional and simplified Chinese scripts as well as Japanese, Korean, Vietnamese, and other East Asian scripts. ↩︎

You can generate training data like it with

make_dataset.pyand some local fonts of your own. ↩︎